Key Points

- Know the importance of training, validation, and test sets to prevent bias

- The difference between random and real effects that can impact performance

- How to split data to measure different model performance

- k-Fold Cross-validation for training and validation

Introduction

In data science, model validation is a process to measure the machine learning reliability to accurately predict the outcome while preventing possible biases. This process includes using different sets for training the model and another portion for validation and testing. That said, splitting the dataset into a different portion for each aspect of the said phase (training, validation, and test) is the law to properly build a good model, and make judgments about its accuracy. This will also combat having a too-optimistic result such as high prediction accuracy due to patterns caused by random effects from the training data.

There are two types of patterns. There is a real effect, which is derived from the real relationship between attributes and the response. There is a random effect, which seems to be real but actually just a random chance. However, it is important to note that when fitting a model from a training set, both the real effect and random effect are present. This is because the former (real effect) is the same for all datasets while the latter (random effect) is different for all datasets. This also means that when a different data (new | test data) is used, only the real effect is present, and the random effect is different which leads the model to have a lower performance result versus when it is in the training set.

Example Scenario

Let’s say that I am investigating the top 10 log of session counts in my Porta SFTP Server with the highest rates to possibly predict what day and when the session most occurred.

| Day | Session Rates |

| Friday | 17 |

| Monday | 11 |

| Saturday | 8 |

| Sunday | 9 |

| Thursday | 7 |

| Tuesday | 10 |

| Wednesday | 4 |

| Friday | 13 |

| Monday | 9 |

| Saturday | 5 |

Imagine that the aggregated data from the table is affected by e.g. business partners/associates (clients) demands (urges) to login to the SFTP Server during the week. A reason could be that in the recent week, different clients weren’t able to log in to the server due to SFTP Server downtime, etc., which resulting those session counts rising the following day (happened by random chance). That said, this is why the same data cannot be used for both training and testing to counteract the random effect.

Measuring Model Performance

If you only measuring one model (not measuring / comparing different types of models), you only need two sets. A larger set to fit the model and the smaller set to measure its effectiveness.

- The 90 percent will be used to fit the model; and

- The 80 percent will be used to validate the effectiveness.

Thus, this will help to make a judgment on the accuracy or performance of the model. If there is any uniquely random effect that is present inherited from the training set, this will not be counted as a benefit as we only use the 80% for the validation to measure its effectiveness.

Comparing & Choosing Model

Suppose you build different models using the common classifier machine learning algorithm Support Vector Machine (SVM) and K-Nearest Neighbors (KNN).

Sample of 4 Models Performance from KNN and SVM

| Model 1 | Model 2 | Model 3 | Model 4 | |

| SVM | 94/100 | 98/100 | 90/100 | 90/100 |

| KNN | 92/100 | 94/100 | 87/100 | 95/100 |

The answer is no. While using Training and Validation sets alone can be reasonably fine when estimating just one model’s accuracy, comparing different models is not. This is because it is unavoidable that the observed accuracy from the high-performing model is equated with the real quality + random effects. This means that the model is most likely to have above-average random effects which leads to being too optimistic.

Sample table of Models with Real and Random effect

| Model | Real Effects | Random Effects | Total |

| SVM – 1 | 92 | +2 | 94 |

| SVM – 2 | 93 | +5 | 98 – Inflated by Random Effects |

| SVM – 3 | 83 | +1 | 84 |

| SVM – 4 | 93 | -3 | 90 |

| KNN – 1 | 94 | -2 | 92 |

| KNN – 2 | 91 | +3 | 94 |

| KNN – 3 | 92 | -5 | 87 |

| KNN – 4 | 94 | +0 | 94 |

This table shows that the random effect can make the model look better than it really is, and or sometimes it can make look worse than it really is.

Introducing Third Set of Data

To circumvent this issue, we need to have another set of data (third set) to check the performance of the chosen model after choosing it from the Validation set.

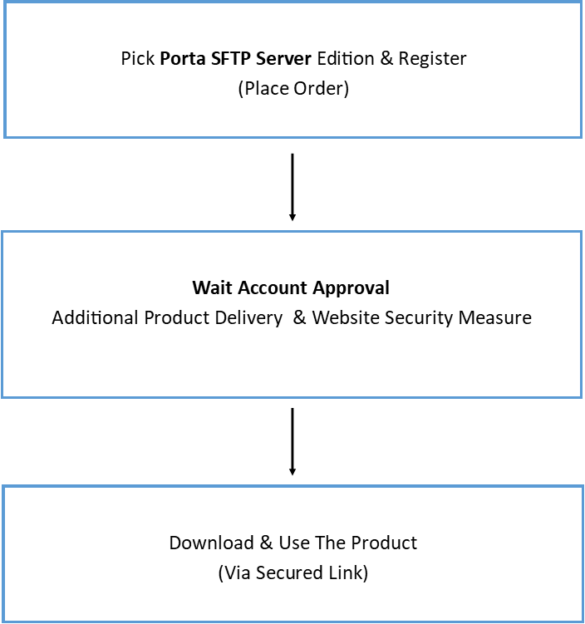

Flow Chart Guide

- Training Set – for building a model

- Validation Set – choosing the best model; and

- Test Set – for estimating the performance of the chosen model

Splitting Data

When building a model, the training set should always be larger in general to let the model to fit and capture most important data as much as possible. On the other hand, the testing should have enough data along with some of the important data.

One model

- 70-90% for training set and 10-30% for the testing set

Comparing model

- 50-70% for training set and the remaining will be divided for the validation and test set

When splitting data into different sets, it is important to note that randomization or rotation for selecting data points for each set can really be helpful to approximately equalize the distribution of important information across sets. This means that training data should have some (if not most) of the important data points that are commonly shared with validation data and/ or test data. However, be careful as like any other techniques, randomization and rotation alone are also vulnerable to introducing selection bias which impacts model accuracy. To minimize (counteract) this chance, use both this technique (combination).

k-Fold Cross Validation

In splitting data, there is always a chance of a huge skewness distribution of important data. For example, what if the important data is only present in validation or test sets? This means that the training will not be fitter to important data points that are only presented to the training and test sets. Well, that’s where cross-validation comes into play.

There are several cross-validation methods in machine learning, but this post will introduce the k-fold cross-validation method. For your learning curve, here are the names of a few:

- k-Fold cross-validation

- Time series (rolling cross-validation)

- Hold-out cross-validation

- Leave-p-out cross-validation

- Leave-one-out cross-validation

- Stratified k-fold cross-validation

- Monte Carlo (shuffle-split)

Remember that all of these have one goal to combat selection bias, help evaluate how the model will generalize independent variables, and give estimates of how good that model performs when encountering new data points to make predictions.

k-Fold cross-validation where k is the number of different equal splits of random data coming from the training sets. However, we need to leave one set from that dataset to be used in the validation. So, k-1=number of splits and minus 1 to leave the validation therefore k-1. And to visualize:

| ITERATIONS | FOLD 1 | FOLD 2 | FOLD 3 | FOLD 4 | FOLD 5 |

| 1 | Validation | Training | Training | Training | Training |

| 2 | Training | Validation | Training | Training | Training |

| 3 | Training | Training | Validation | Training | Training |

| 4 | Training | Training | Training | Validation | Training |

| 5 | Training | Training | Training | Training | Validation |

Note. The table shows k=5 minus 1 (5-1) to leave one for the validation. This is called 5-fold cross-validation.

As you can see in the table above, the 4 sets of splits go to training and 1 set for the validation and continue to rotate in order for the next iteration.

Possible Questions

Can the best-performing model from k-fold cross-validation be enough to use? The answer is no, although you can average the different model performances to get an idea of how well the model is, this will not guarantee to perform well in new data. Instead, you have to use all of the training sets (k) to fit the model.

What is the best way to determine the value number of set/split (k)? There is no best way to determine or standard value of k but the most common is 10.

To learn more about the math behind cross-validation please visit An Introduction to Statistical Learning (statlearning.com) chapter 5.

Conclusion

In the field of data science, there are so many ways to give solutions to the same problems, and learning is the key to innovation. This post shows only the basic general idea of how important the validation and testing phase is after the model is fitted from the training sets. While it is true, this post also shows the different splitting techniques for the dataset to minimize bias. That said, it is in conjunction with the bias/variance tradeoffs to achieve the optimal model prediction accuracy.

I hope you enjoy reading this post. Please subscribe to the mailing list as there will be coding in R and or Python for these different techniques, to see it in action.